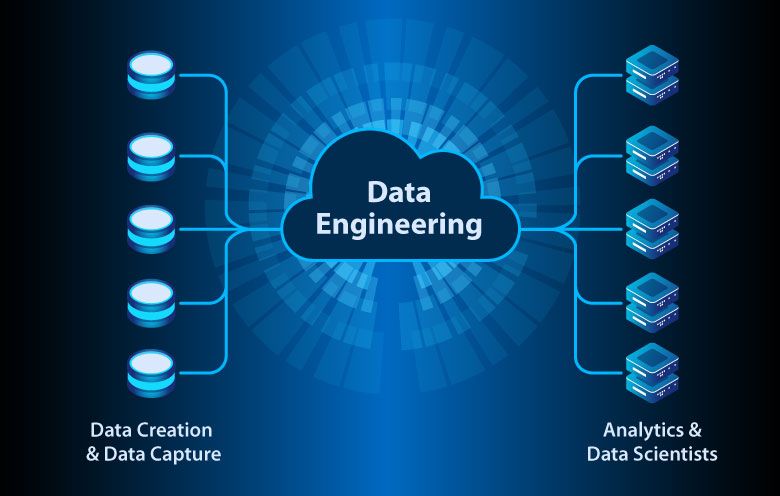

Data engineering is a field within data science and computer science that focuses on the practical application of data collection, processing, storage, and analysis. It involves designing, building, and maintaining systems and infrastructure that enable the efficient and reliable handling of large volumes of data.

Data engineers work with various tools and technologies to develop data pipelines, which are workflows that automate the movement and transformation of data from its source to a destination where it can be analyzed or consumed by other applications. These pipelines often involve extracting data from multiple sources, cleaning and transforming it into a usable format, and loading it into data storage systems such as databases, data warehouses, or data lakes.
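
To make the extract, transform, and load steps concrete, here is a minimal sketch of such a pipeline using only the Python standard library. The CSV sample, the `orders` table, and the function names are hypothetical, chosen for illustration; a production pipeline would typically read from real sources and run under an orchestration tool.

```python
import csv
import io
import sqlite3

# Hypothetical raw input: one row has stray whitespace, one has no amount.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,Bob,
3,carol,5.00
"""


def extract(text):
    """Extract: read CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount, tidy names, cast types."""
    cleaned = []
    for row in rows:
        amount = (row["amount"] or "").strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().title(), float(amount))
        )
    return cleaned


def load(records, conn):
    """Load: write the cleaned records into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(text, conn):
    """Chain the three stages from source to destination."""
    load(transform(extract(text)), conn)


conn = sqlite3.connect(":memory:")  # in-memory database stands in for a warehouse
run_pipeline(RAW_CSV, conn)
rows = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(rows)
```

Keeping each stage as a separate function is one common design choice: it lets each step be tested on its own and swapped out (for example, replacing the CSV source with an API call) without touching the others.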

Key tasks and responsibilities of data engineers include:

- Data ingestion: Collecting data from different sources such as databases, files, APIs, and streaming platforms.

- Data transformation: Cleaning, enriching, and transforming raw data into a format suitable for analysis or storage.

- Data storage: Storing data in appropriate storage systems, considering factors like scalability, performance, and cost.

- Data processing: Performing batch or real-time processing on data to derive insights or feed downstream applications.

- Data quality and governance: Ensuring the accuracy, consistency, and reliability of data through validation, monitoring, and governance processes.

- Infrastructure management: Managing and optimizing the infrastructure required to support data processing and storage, including cloud services, databases, and distributed computing frameworks.
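
As an illustration of the data quality item above, the following sketch shows what simple validation rules might look like in plain Python. The field names (`order_id`, `amount`) and the three rules are assumptions made for this example, not a standard; real projects often rely on dedicated validation frameworks.

```python
def validate(records, required=("order_id", "amount")):
    """Return a list of (row_index, problem) tuples for the given records."""
    issues = []
    seen_ids = set()
    for index, record in enumerate(records):
        # Rule 1: required fields must be present and not None.
        for field in required:
            if record.get(field) is None:
                issues.append((index, f"missing {field}"))
        # Rule 2: amounts must not be negative.
        amount = record.get("amount")
        if amount is not None and amount < 0:
            issues.append((index, "negative amount"))
        # Rule 3: order IDs must be unique across the batch.
        order_id = record.get("order_id")
        if order_id is not None:
            if order_id in seen_ids:
                issues.append((index, "duplicate order_id"))
            seen_ids.add(order_id)
    return issues


# Hypothetical batch with three deliberate problems.
sample = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 1, "amount": -5.0},
    {"order_id": 2, "amount": None},
]
issues = validate(sample)
for index, problem in issues:
    print(f"row {index}: {problem}")
```

Checks like these can run as a step inside a pipeline, so that bad records are reported or quarantined before they reach downstream consumers.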

Data engineering is essential for organizations that deal with large volumes of data and rely on data-driven decision-making. It enables businesses to extract value from their data assets by providing the foundation for analytics, machine learning, and other downstream applications.